Senior thesis: multi-armed bandits for robotic field estimation task

The problem of field estimation, or finding the spatial distribution of a resource in space, has many applications in problems of robotic search. In my thesis, advised by Prof. Naomi Leonard, I approached the problem in the framework of a Gaussian Multi-Armed Bandit (MAB) task, a problem in which an agent must learn about an unknown environment while maximizing expected reward. The arms correspond to discretized points in space, and the smoothness of the field is modeled as spatial correlation between the arms. To address this problem, I used the Upper Confidence Limit (UCL) algorithm, which was developed by Reverdy et al. in 2014 for Gaussian MAB problems with correlated arm rewards and prior knowledge.

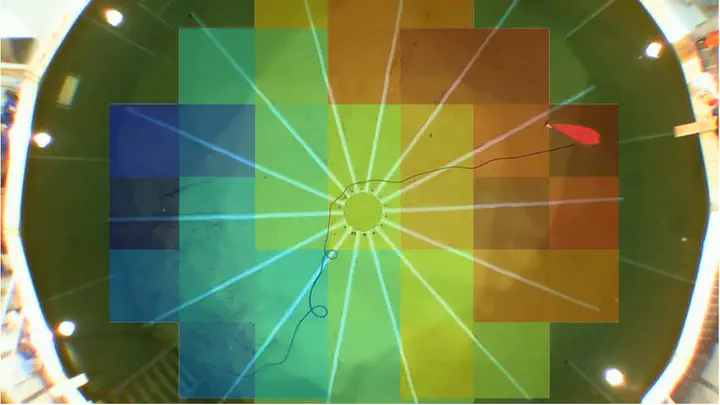

The smoothness of the field is measured by a parameter known as the length scale. In real world applications, the agent can only have an estimate of this length scale. I explored the performance of UCL with correlation in comparison to other algorithms when the estimate of the length scale is correct. I then explored the effect of overestimates and underestimates in the length scale for fields of varying smoothness. Finally, I implemented the search task in a testbed with a robot, giving additional metrics of performance.

The simulations showed that knowledge of the spatial correlation of the arms can result in improvements in performance using UCL when compared to other algorithms that do not account for correlation. In addition, I observed that the best performance is not actually obtained for the correct length scale estimate, but for some particular overestimate. This effect must be studied further, but the results suggest that an agent performing this search should use an overestimate and not an underestimate of the length scale that describes the field, provided this overestimate is not grossly inaccurate.